GalLoP: Learning Global and Local Prompts for Vision-Language Models

Published in European Conference on Computer Vision (ECCV 2024), 2024

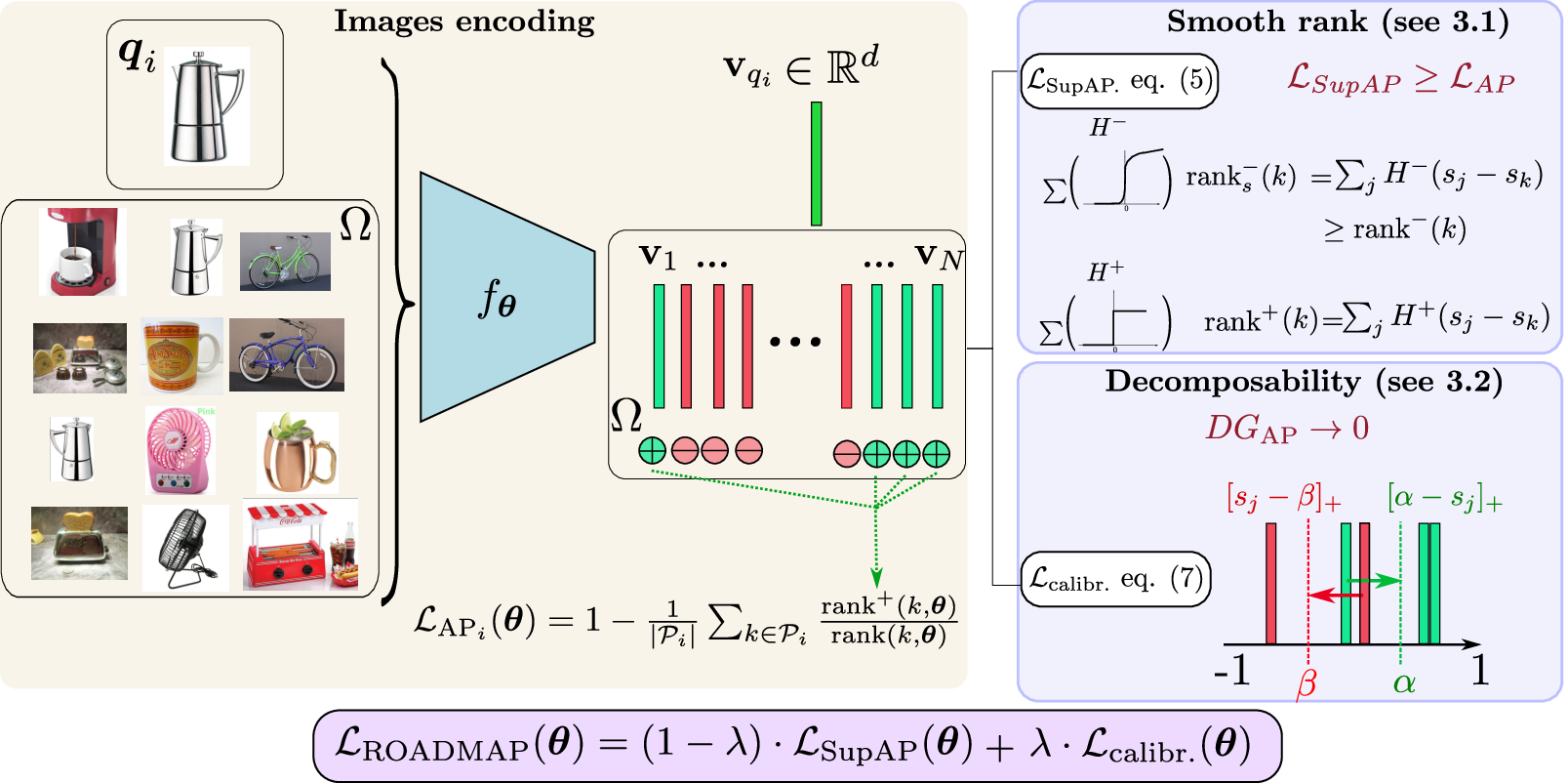

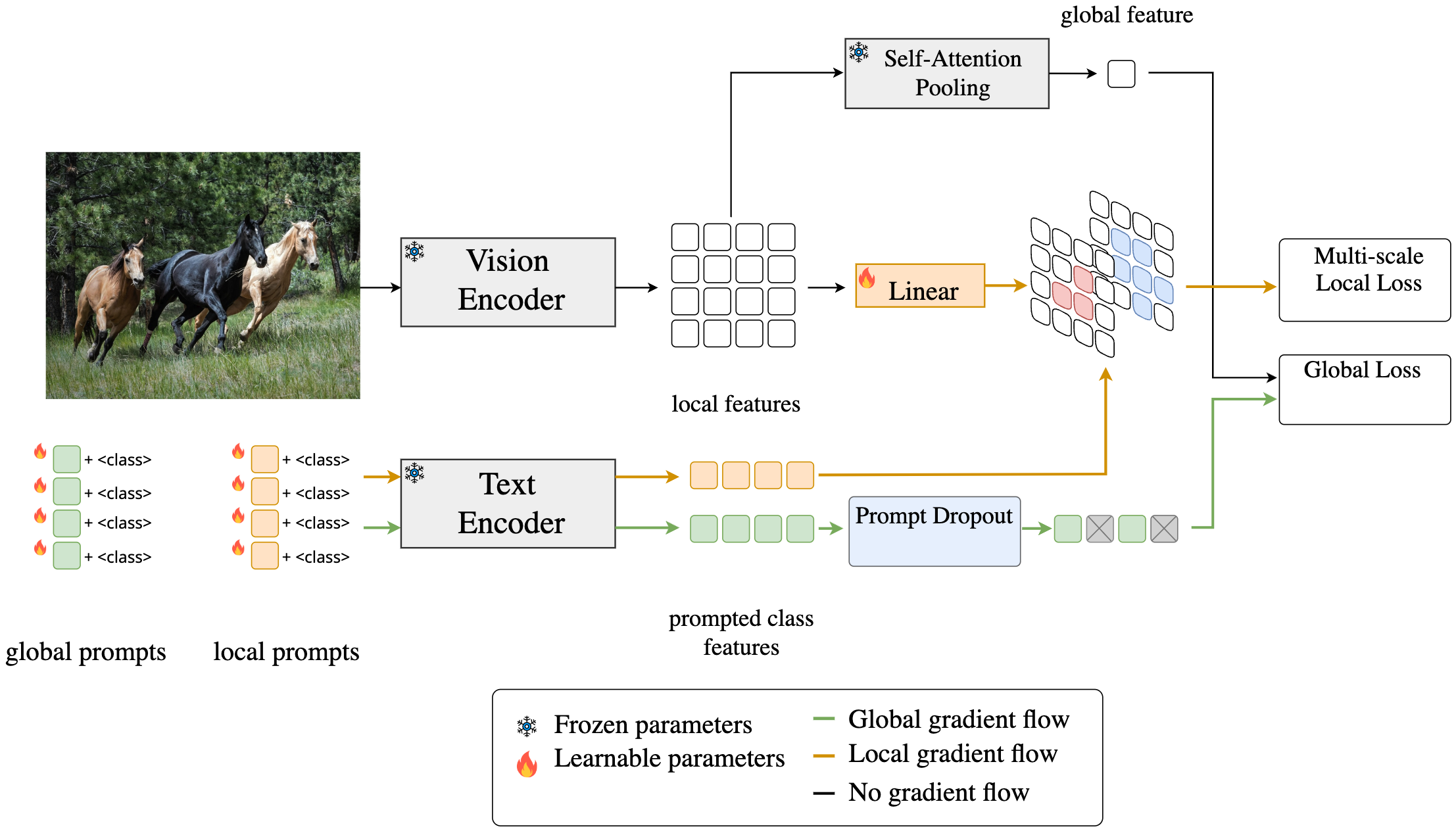

Prompt learning has been widely adopted to efficiently adapt vision-language models (VLMs), e.g. CLIP, for few-shot image classification. Despite their success, most prompt learning methods trade off classification accuracy against robustness, e.g. in domain generalization or out-of-distribution (OOD) detection. In this work, we introduce Global-Local Prompts (GalLoP), a new prompt learning method that learns multiple diverse prompts leveraging both global and local visual features. The training of the local prompts relies on local features with an enhanced vision-text alignment. To focus only on pertinent features, this local alignment is coupled with a sparsity strategy in the selection of the local features. We enforce diversity on the set of prompts using a new “prompt dropout” technique and a multiscale strategy on the local prompts. GalLoP outperforms previous prompt learning methods in accuracy on eleven datasets, in different few-shot settings and with various backbones. Furthermore, GalLoP shows strong robustness in both domain generalization and OOD detection, even outperforming dedicated OOD detection methods. Code and instructions to reproduce our results will be open-sourced.
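To make the two mechanisms named above more concrete, here is a minimal NumPy sketch of one plausible reading of them. It is not the released GalLoP code. It assumes that the sparsity strategy keeps only the `k` local (patch) features most similar to each class text embedding and averages their similarities, and that prompt dropout randomly discards some prompts before their logits are averaged. All function names, shapes, and the `keep_prob` parameter are hypothetical choices made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def sparse_local_logits(patch_feats, text_feats, k):
    """Assumed sparsity rule: per class, average the k highest patch-text similarities.

    patch_feats: (N, D) local visual features for one image.
    text_feats:  (C, D) one text embedding per class for a given prompt.
    Returns an array of shape (C,).
    """
    sims = l2_normalize(patch_feats) @ l2_normalize(text_feats).T  # (N, C)
    topk = np.sort(sims, axis=0)[-k:, :]  # k largest similarities per class
    return topk.mean(axis=0)


def prompt_dropout_mask(num_prompts, keep_prob, rng):
    """Assumed prompt dropout: keep each prompt with probability keep_prob.

    At least one prompt is always kept so the ensemble is never empty.
    """
    mask = rng.random(num_prompts) < keep_prob
    if not mask.any():
        mask[rng.integers(num_prompts)] = True
    return mask


def ensemble_logits(per_prompt_logits, mask):
    """Average the logits of the prompts that survived dropout.

    per_prompt_logits: (P, C), mask: (P,) boolean.
    """
    return per_prompt_logits[mask].mean(axis=0)
```

Under the same assumptions, a multiscale variant could give each local prompt its own value of `k`, so that different prompts attend to regions of different sizes.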

Recommended citation: Marc Lafon, Elias Ramzi, Clément Rambour, Nicolas Audebert, Nicolas Thome: GalLoP: Learning Global and Local Prompts for Vision-Language Models. European Conference on Computer Vision (ECCV 2024). https://arxiv.org/pdf/2407.01400v1